Real-world testing of its AR prototypes will help Google better understand how they can be used in everyday life.

Starting next month, Google will begin testing its AR-powered glasses, which offer real-time language translation, in the real world. The simply designed glasses, seemingly a successor to Google Glass, are one of several augmented reality prototypes that the Mountain View, California-based company will test outside the lab. According to the company, testing only in a lab environment has limitations, and testing in the real world will allow it “to better understand how these devices can help people in their everyday lives.”

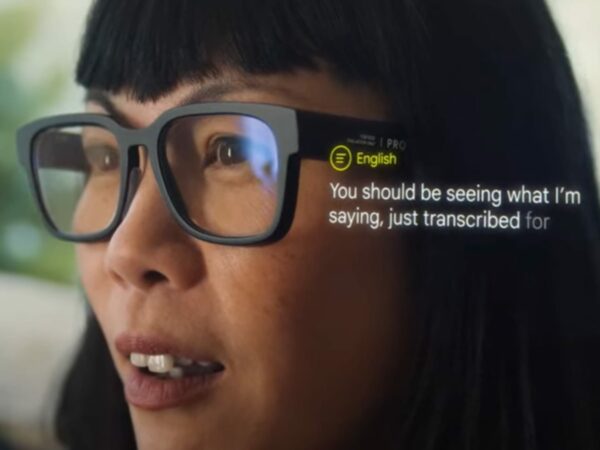

According to a Google blog post, real-world testing will help the company, as it develops experiences like AR navigation, “take factors such as weather and busy intersections into account,” conditions that can be “difficult, sometimes impossible, to fully recreate indoors.” One of the early AR prototypes is a pair of simple-looking spectacles that the company has been testing in its labs. The glasses provide real-time translation and transcription, displaying the text directly on the lens. Google first teased them at Google I/O 2022.

These research prototype glasses offer experiences such as translation, transcription, and navigation. They look like ordinary glasses but feature an in-lens display along with audio and visual sensors, such as a microphone and a camera. “We will be researching different use cases that use audio sensing, such as speech transcription and translation, and visual sensing, which uses image data for use cases such as translating text or positioning during navigation. The AR prototypes don’t support photography or videography, though image data will be used to enable use cases like navigation, translation, and visual search,” the company said.

For example, if you are in a country where you do not understand the local language, you could use the camera to translate the text on signs in front of you, or get directions to a nearby restaurant displayed right in your line of sight on the glasses.

Google says that image data is deleted once the experience is completed, except when it is needed for analysis and debugging. In such cases, the image data is first scrubbed of sensitive content, such as faces and license plates, the company says. “Then it is stored on a secure server, with limited access by a small number of Googlers for analysis and debugging. After 30 days, it is deleted,” Google notes. In addition, an LED indicator turns on whenever image data is being saved for analysis and debugging, and bystanders can ask the tester to delete the data if they wish.

Juston Payne, Group Product Manager at Google, said that the company will begin small-scale testing in public settings in the US. The testing will exclude schools, government buildings, healthcare locations, places of worship, social service locations, areas meant for children, emergency response locations, rallies or protests, and other similar places.

[Source=gadgets360]
